Large Language Models (LLMs) are amazing, excelling at a wide range of tasks. But when it comes to machine translation (MT), traditional fine-tuning often causes a frustrating problem: catastrophic forgetting. The LLM gets better at translating but loses some of its general knowledge and its ability to perform other tasks. This is a big deal, especially for safety and instruction-following capabilities. These are often developed with proprietary data, so practitioners usually cannot simply mix the original training data back in to protect them.

This research paper introduces a clever solution called RaDis (Rationale Distillation) that tackles this problem head-on. Instead of training the LLM on translations alone, RaDis uses the LLM's own ability to generate rationales (essentially explanations) for the reference translations in the training data. These rationales act as a form of "replay data," helping the LLM retain its general knowledge while it learns new translation skills.

The core of RaDis is simple yet effective:

LLM Rationale Generation: The researchers observed that instruction-tuned LLMs often generate detailed rationales when asked to translate. These rationales contain general knowledge and safety principles.

Enriched Training Data: RaDis prompts the LLM to generate these rationales for the reference translations in the training data. Each rationale is then combined with its reference translation to create an enriched dataset.

Combined Loss Function: The model is trained on this enriched dataset using a loss function that includes both a standard translation loss and a self-distillation loss on the rationale tokens. This self-distillation encourages the model to retain the knowledge encapsulated in the rationales.
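To make the combined objective concrete, here is a minimal pure-Python sketch. It rests on several assumptions that are ours, not necessarily the paper's exact formulation. Each enriched example is laid out as the prompt, then the reference translation, then the self-generated rationale. The self-distillation term is an ordinary negative log-likelihood on the rationale tokens: a sequence-level self-distillation, since those targets were produced by the model itself. The two terms are mixed with a hypothetical weight `lam`. The names `EnrichedExample` and `radis_style_loss` are illustrative only; real training would compute the same masked losses on framework tensors.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical layout and weighting; a sketch, not the paper's reference implementation.


@dataclass
class EnrichedExample:
    """One enriched training example, represented as token ids."""
    prompt: List[int]     # translation instruction + source sentence (no loss)
    reference: List[int]  # reference translation (translation loss)
    rationale: List[int]  # model's own rationale for the reference (self-distillation loss)

    def sequence_and_masks(self) -> Tuple[List[int], List[int], List[int]]:
        """Concatenate prompt + reference + rationale and build per-token loss masks."""
        seq = self.prompt + self.reference + self.rationale
        n_p, n_r, n_z = len(self.prompt), len(self.reference), len(self.rationale)
        mt_mask = [0] * n_p + [1] * n_r + [0] * n_z  # tokens scored by the translation loss
        rd_mask = [0] * n_p + [0] * n_r + [1] * n_z  # tokens scored by the distillation loss
        return seq, mt_mask, rd_mask


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary distribution."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def masked_nll(logits: Sequence[Sequence[float]], targets: Sequence[int],
               mask: Sequence[int]) -> float:
    """Mean negative log-likelihood over positions where mask == 1."""
    total, count = 0.0, 0
    for row, tok, keep in zip(logits, targets, mask):
        if keep:
            total -= log_softmax(row)[tok]
            count += 1
    return total / count if count else 0.0


def radis_style_loss(logits: Sequence[Sequence[float]], example: EnrichedExample,
                     lam: float = 1.0) -> Tuple[float, float, float]:
    """Return (translation NLL + lam * rationale NLL, translation NLL, rationale NLL).

    Assumes logits[i] is the model's prediction for token i of the concatenated
    sequence, i.e. already shifted for next-token prediction. Prompt positions
    are masked out, so their rows never contribute to the loss.
    """
    seq, mt_mask, rd_mask = example.sequence_and_masks()
    l_mt = masked_nll(logits, seq, mt_mask)
    l_rd = masked_nll(logits, seq, rd_mask)
    return l_mt + lam * l_rd, l_mt, l_rd


# Usage: with uniform logits over a vocabulary of 8, every scored token costs ln(8).
ex = EnrichedExample(prompt=[5, 6], reference=[1, 2], rationale=[3, 0, 2])
uniform = [[0.0] * 8 for _ in range(7)]
total, l_mt, l_rd = radis_style_loss(uniform, ex, lam=1.0)
print(round(total, 4))  # 4.1589, i.e. 2 * ln(8)
```

Because the prompt tokens are masked out of both terms, only the reference and the rationale contribute to the loss. Setting `lam=0` reduces this sketch to vanilla fine-tuning on the reference translations.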

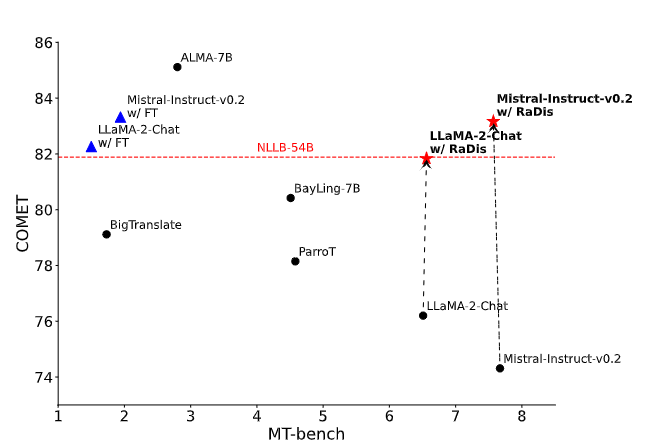

The researchers tested RaDis on two popular LLMs: LLaMA-2-7B-Chat and Mistral-7B-Instruct-v0.2. The results were impressive:

Significant Improvement in Translation: RaDis substantially improved translation performance compared to various baseline methods, including vanilla fine-tuning and other continual learning techniques.

Preservation of General Abilities: Importantly, RaDis did not cause a significant drop in the LLMs' performance on general-ability evaluations, including MT-Bench, AlpacaEval, safety benchmarks, and reasoning tasks. This indicates that RaDis effectively mitigates catastrophic forgetting.

Ablation Study: The researchers conducted an ablation study confirming the importance of self-generated rationales: using rationales produced by other LLMs was less effective.

RaDis presents a promising new approach to fine-tuning LLMs for translation. By cleverly leveraging the LLMs' own ability to generate rationales, RaDis offers a path towards creating more versatile and robust LLMs that excel in specialized tasks without sacrificing their overall intelligence and safety. The research opens exciting avenues for future work in mitigating catastrophic forgetting and building more capable and reliable LLMs.