Large language models (LLMs) are incredibly powerful, but they suffer from a significant drawback: catastrophic forgetting. This means that when you train an LLM on a new task, it can lose its ability to perform well on previously learned tasks. This paper introduces Controlled LoRA (CLoRA), a new method designed to significantly reduce this forgetting while maintaining parameter efficiency.

Catastrophic forgetting is a major hurdle in the continued training of LLMs. Existing parameter-efficient methods such as Low-Rank Adaptation (LoRA) reduce the number of trainable parameters but don't fully address the forgetting problem. LoRA constrains the rank of the update matrix but not its direction, and it is the direction that largely determines how the LLM's output changes.
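The point about direction can be made concrete with a toy example. The following minimal pure-Python sketch assumes a LoRA-style layer `h = W0 x + B A x` on a 3×3 weight; the helper names and all numbers are hypothetical. Both updates below have rank 1, yet only the one whose `A` reads the direction of `x_old` changes the output for that input.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]

def lora_forward(W0, B, A, x):
    """LoRA-style forward pass h = W0 x + B (A x).

    W0 is the frozen pretrained weight (d_out x d_in); only the low-rank
    factors B (d_out x r) and A (r x d_in) would be trained.
    """
    base = matvec(W0, x)
    delta = matvec(B, matvec(A, x))
    return [b + d for b, d in zip(base, delta)]

# Toy 3x3 layer; all values are hypothetical.
W0 = [[1.0, 0.0, 0.0],
      [0.0, 1.0, 0.0],
      [0.0, 0.0, 1.0]]
x_old = [1.0, 0.0, 0.0]            # an input from a previously learned task

B = [[1.0], [1.0], [0.0]]          # r = 1 output direction
A_aligned = [[1.0, 0.0, 0.0]]      # reads the same direction as x_old
A_orthogonal = [[0.0, 1.0, 0.0]]   # reads a direction orthogonal to x_old

# Both updates B A are rank 1, so a rank constraint alone cannot tell them apart.
h_before = matvec(W0, x_old)
h_aligned = lora_forward(W0, B, A_aligned, x_old)
h_orthogonal = lora_forward(W0, B, A_orthogonal, x_old)
print(h_before, h_aligned, h_orthogonal)
# -> [1.0, 0.0, 0.0] [2.0, 1.0, 0.0] [1.0, 0.0, 0.0]
```

Same rank, different direction: the aligned update rewrites the old-task output, while the orthogonal one leaves it untouched.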

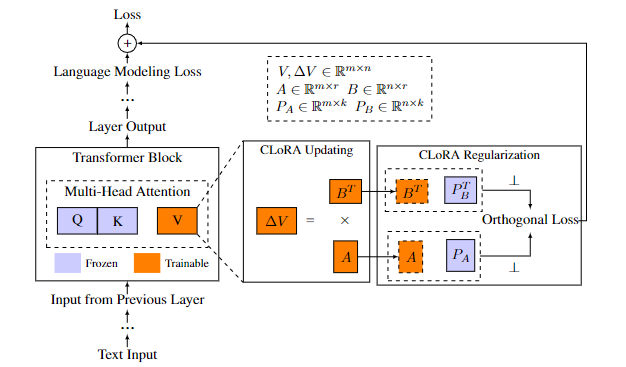

CLoRA addresses this limitation by adding a constraint on the null space of the update matrix, which restricts the directions the update can take. Because inputs lying in that null space are left unaffected by the update, the constraint limits the scale of output changes, mitigating catastrophic forgetting without severely limiting model capacity. Think of it as guiding the LLM's learning in a more controlled manner.
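A soft version of this constraint can be written as a penalty added to the task loss. The sketch below assumes `ΔW = B A` with LoRA factors `B` (d_out × r) and `A` (r × d_in), plus fixed regularization matrices `P` (d_in × k) and `Q` (d_out × k), and penalizes ‖AP‖²_F + ‖QᵀB‖²_F. This exact form, the names `clora_penalty` and `total_loss`, and the weight `lam` are our assumptions modeled on the description above, not the paper's code. The key property: if `A P = 0`, then `ΔW P = B (A P) = 0`, so the columns of `P` lie in the null space of the update and inputs in that subspace produce no output change.

```python
def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def transpose(X):
    return [list(col) for col in zip(*X)]

def frob_sq(X):
    """Squared Frobenius norm."""
    return sum(v * v for row in X for v in row)

def clora_penalty(A, B, P, Q):
    """Assumed regularizer ||A P||_F^2 + ||Q^T B||_F^2 (hypothetical form).

    A: r x d_in, B: d_out x r (trainable LoRA factors, Delta W = B A)
    P: d_in x k, Q: d_out x k (fixed regularization matrices, not trained)
    """
    return frob_sq(matmul(A, P)) + frob_sq(matmul(transpose(Q), B))

def total_loss(task_loss, A, B, P, Q, lam=1.0):
    """Task loss plus the weighted subspace penalty (lam is a hypothetical knob)."""
    return task_loss + lam * clora_penalty(A, B, P, Q)

P = [[1.0], [0.0], [0.0]]    # protected input direction e1 (k = 1)
Q = [[0.0], [0.0], [1.0]]    # protected output direction e3
B = [[1.0], [1.0], [0.0]]

A_bad = [[1.0, 0.5, 0.0]]    # overlaps the protected input direction
A_good = [[0.0, 0.5, 0.0]]   # lies in the complement of span(P)

print(clora_penalty(A_bad, B, P, Q))                # -> 1.0
print(clora_penalty(A_good, B, P, Q))               # -> 0.0
print(total_loss(0.25, A_bad, B, P, Q, lam=0.5))    # -> 0.75

# Zero penalty on the A side means span(P) sits in the null space of Delta W.
delta_W = matmul(B, A_good)
print(matmul(delta_W, P))                           # -> [[0.0], [0.0], [0.0]]
```

Because the penalty is soft, training trades it off against the task loss through `lam` rather than enforcing it exactly.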

The core idea is illustrated in the paper's Figure 1. By constraining the null space of the update, CLoRA keeps output changes small for inputs in the constrained subspace, so new learning is less likely to disrupt previously learned knowledge, while the remaining directions stay free to fit the new task. This is how the approach balances model capacity against the risk of forgetting.
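CLoRA imposes its constraint through regularization matrices during training, so the constraint is encouraged rather than exact. To show the geometry in isolation, the sketch below swaps in a hard projection instead (our substitution, not CLoRA's procedure): it replaces `A` with `A (I − P Pᵀ)`, which places span(`P`) exactly in the null space of `ΔW = B A`. It assumes `P` has orthonormal columns; `project_out` and all values are hypothetical.

```python
def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def transpose(X):
    return [list(col) for col in zip(*X)]

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]

def project_out(A, P):
    """Return A (I - P P^T), removing the span of P from A's rows.

    Assumes the k columns of P (d_in x k) are orthonormal.
    """
    n = len(P)
    PPt = matmul(P, transpose(P))
    proj = [[(1.0 if i == j else 0.0) - PPt[i][j] for j in range(n)] for i in range(n)]
    return matmul(A, proj)

P = [[1.0], [0.0], [0.0]]   # protected input direction, k = 1
B = [[1.0], [0.0], [1.0]]   # LoRA factor, d_out x r with r = 1
A = [[0.8, 0.6, 0.0]]       # unconstrained LoRA factor, r x d_in

A_ctrl = project_out(A, P)       # -> [[0.0, 0.6, 0.0]]
delta_free = matmul(B, A)        # Delta W without the constraint
delta_ctrl = matmul(B, A_ctrl)   # Delta W with span(P) in its null space

x_old = [1.0, 0.0, 0.0]   # input inside span(P)
x_new = [0.0, 1.0, 0.0]   # input in the complement of span(P)

print(matvec(delta_free, x_old), matvec(delta_ctrl, x_old))
# -> [0.8, 0.0, 0.8] [0.0, 0.0, 0.0]
print(matvec(delta_free, x_new), matvec(delta_ctrl, x_new))
# -> [0.6, 0.0, 0.6] [0.6, 0.0, 0.6]
```

Inputs in span(`P`) now see no output change, while the update's effect on the orthogonal input `x_new` is unchanged: the capacity-versus-forgetting balance in its simplest form.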

The researchers evaluated CLoRA against several baseline methods, including standard LoRA, DoRA, PiSSA, MiLoRA, and methods using rank reduction or L2 regularization. The experiments used various benchmark datasets, including commonsense and mathematical reasoning benchmarks, allowing for both in-domain and out-of-domain evaluation, with accuracy as the performance metric.

They also investigated how the initialization of CLoRA's regularization matrices affects performance, comparing random initialization with SVD-based approaches. The optimal size k of the regularization matrix turned out to be task-dependent.
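The two initialization families can be sketched in pure Python. This is a sketch under stated assumptions: we orthonormalize the random matrix, and for the SVD-based variant we take the top-k right singular vectors of the pretrained weight `W0`. Which matrix is decomposed and which singular vectors are kept are our assumptions, not details given here. The names `random_init` and `svd_init` are hypothetical, and orthogonal iteration stands in for a library SVD so the example has no dependencies.

```python
import random

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def transpose(X):
    return [list(col) for col in zip(*X)]

def gram_schmidt(cols):
    """Orthonormalize column vectors in order (assumes linear independence)."""
    basis = []
    for v in cols:
        w = list(v)
        for b in basis:
            dot = sum(x * y for x, y in zip(w, b))
            w = [x - dot * y for x, y in zip(w, b)]
        norm = sum(x * x for x in w) ** 0.5
        basis.append([x / norm for x in w])
    return basis

def random_columns(d_in, k, rng):
    """k random Gaussian vectors of length d_in, orthonormalized (our choice)."""
    return gram_schmidt([[rng.gauss(0.0, 1.0) for _ in range(d_in)] for _ in range(k)])

def random_init(d_in, k, seed=0):
    """Random initialization: a d_in x k matrix with orthonormal columns."""
    return transpose(random_columns(d_in, k, random.Random(seed)))

def svd_init(W0, k, iters=100, seed=0):
    """SVD-based initialization (assumed variant): approximate the top-k right
    singular vectors of W0 by orthogonal iteration on W0^T W0."""
    WtW = matmul(transpose(W0), W0)
    cols = random_columns(len(W0[0]), k, random.Random(seed))
    for _ in range(iters):
        # Multiply every column by W0^T W0, then re-orthonormalize.
        cols = gram_schmidt([[sum(a * b for a, b in zip(row, c)) for row in WtW] for c in cols])
    return transpose(cols)   # d_in x k; columns are singular vectors (up to sign)

W0 = [[5.0, 0.0, 0.0],
      [0.0, 3.0, 0.0],
      [0.0, 0.0, 1.0]]
P_rand = random_init(3, 2)   # k = 2 random orthonormal directions
P_svd = svd_init(W0, 2)      # columns approach +/- e1 and +/- e2
```

Treating k as a parameter of both functions mirrors the observation that the best size is task-dependent: in practice it would be tuned per task like any other hyperparameter.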

CLoRA consistently outperformed existing LoRA-based methods on both in-domain and out-of-domain evaluations, indicating that it mitigates catastrophic forgetting effectively. An analysis of the model parameters supported this: CLoRA reduced output changes without significantly compromising model capacity, balancing the trade-off between the two.

CLoRA is a significant advance in parameter-efficient fine-tuning for LLMs. Its strong performance across benchmarks and its balance between model capacity and forgetting make it a promising technique for the continued training of LLMs with less catastrophic forgetting.

While highly effective, there's always room for improvement. Future work could explore: