ArXiv Paper Title:

Automatically Interpreting Millions of Features in Large Language Models

October 21, 2024

Keywords:

Large Language Models, Interpretability, Sparse Autoencoders, Natural Language Explanations

Read the paper on ArXiv

Unlocking LLMs: Automating the Interpretation of Millions of Features

Large language models (LLMs) are amazing, but understanding how they work remains a significant challenge. While individual neurons offer limited insight, sparse autoencoders (SAEs) provide a promising pathway to unlock their inner workings. SAEs transform the complex LLM activations into a higher-dimensional latent space – potentially easier for humans to interpret. However, these SAEs can have millions of latent features, making manual interpretation impossible. This research introduces an automated framework to tackle this challenge head-on.

The Automated Interpretation Pipeline: From Latent Features to Human-Understandable Explanations

This research paper details a novel, open-source framework designed to automatically generate and evaluate natural language explanations for the latent features learned by SAEs trained on LLMs. This innovative pipeline consists of several key steps:

- SAE Training: SAEs are trained on different LLMs (Llama, Gemma) using varied architectures, activation functions, and loss functions.

- Activation Collection: Latent activations are collected from the trained SAEs using a large text corpus (RedPajama-v2, Pile).

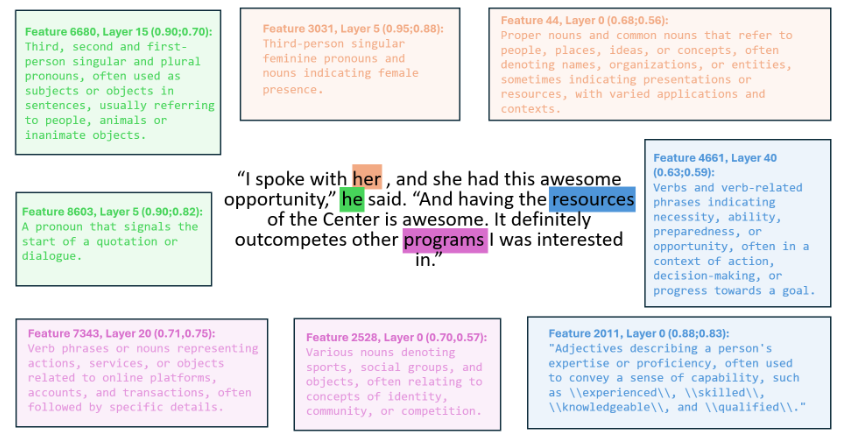

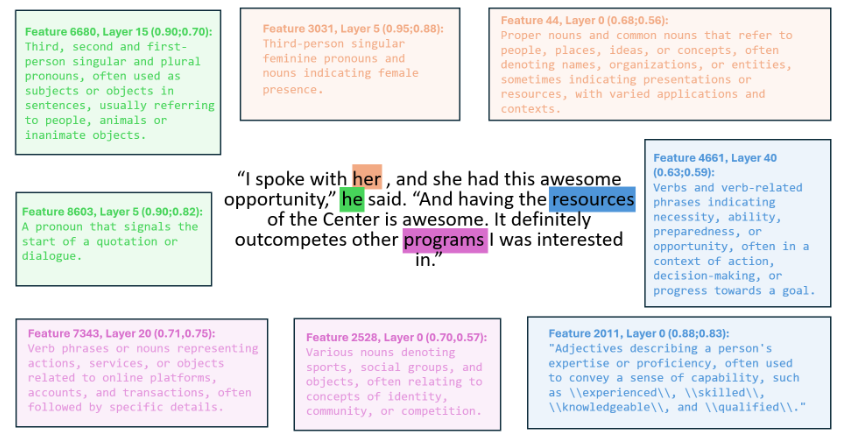

- Explanation Generation: LLMs (Llama 70b, Claude) generate natural language explanations for each latent feature, based on its activation patterns. Clever prompting techniques are used to maximize the quality and clarity of these explanations.

- Explanation Scoring: Five novel, computationally efficient scoring methods (Detection, Fuzzing, Surprisal, Embedding, Intervention) are introduced to assess explanation quality. These methods are compared to existing, more computationally expensive simulation-based methods. The new methods focus on the ability of the explanations to distinguish between activating and non-activating contexts.

- Semantic Similarity Analysis: The semantic similarity between independently trained SAEs across different LLM layers is measured using the Hungarian algorithm to align latent features and comparing their explanations.

The research yields several key findings:

- Superior Interpretability: SAE latents prove considerably more interpretable than individual neurons, even when neurons are sparsified using top-k selection.

- Efficient Automated Explanations: The LLM-based framework successfully generates millions of high-quality explanations.

- Effective Novel Scoring Methods: The new scoring methods show strong correlations with simulation-based methods, offering a more computationally efficient approach. Intervention scoring, in particular, reveals previously hidden features.

- Layer-Wise Semantic Consistency: SAEs trained on adjacent layers of the residual stream exhibit high semantic similarity, revealing a degree of consistency in feature representation.

Future Directions and Limitations

While the results are promising, certain limitations and potential areas for improvement exist:

- Bias in Scoring: The reliance on specific datasets and prompts for evaluation could introduce biases into the explanation scoring.

- Computational Resources: While more efficient than simulation methods, generating and evaluating millions of explanations still requires significant computational resources.

- Generalizability: The findings might not perfectly generalize to all LLMs, SAE architectures, or datasets.

- Subjectivity in Quality Assessment: Incorporating human evaluation of explanation quality could provide a more complete assessment.

Future research could focus on:

- Improving Explanation Quality: Refining prompting techniques and utilizing more advanced LLMs.

- Developing New Scoring Metrics: Exploring additional metrics to capture different aspects of explanation quality.

- Extending to Other Modalities: Applying the framework to other data types beyond text.

- Further Investigating Intervention Scoring: Expanding its application to different tasks and LLMs.

- Steering and Concept Localization: Leveraging the generated explanations to improve methods for steering and localizing concepts within LLMs.

This research represents a substantial step towards a deeper understanding of LLMs. By automating the interpretation of millions of latent features, this work opens exciting new avenues for research and application, paving the way for more interpretable and controllable AI systems. The authors have made their code and generated explanations publicly available, encouraging further exploration and development in this rapidly evolving field.