ArXiv Paper Title:

Can Large Language Models Invent Algorithms to Improve Themselves?

October 23, 2024

Keywords:

Self-Improving LLMs, Autonomous AI, Algorithm Invention, AI Development

Read the paper on ArXiv

Can LLMs Invent Algorithms to Improve Themselves?

This post explores a research paper investigating whether Large Language Models (LLMs) can autonomously create algorithms to enhance their performance. It's a fascinating look into the future of AI self-improvement!

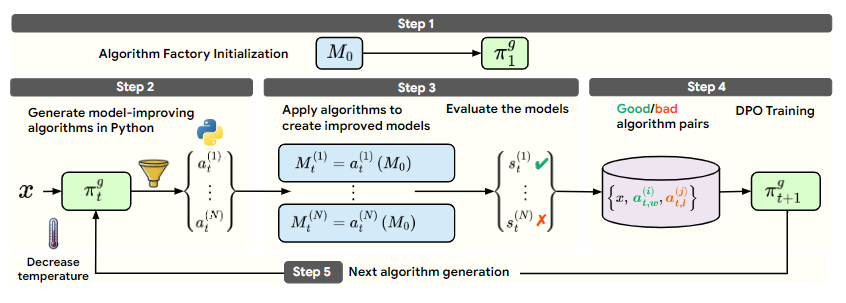

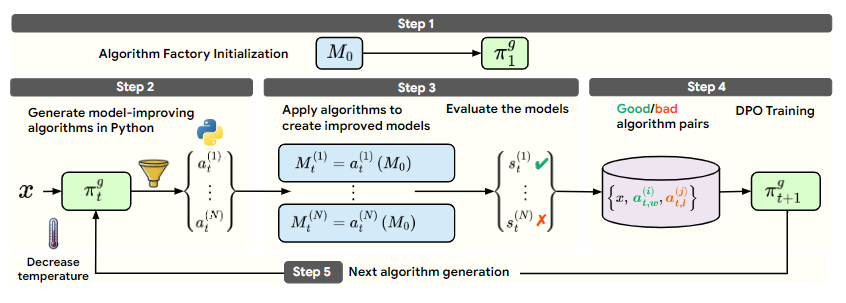

The paper introduces the "Self-Developing" framework. This framework lets an LLM (the "seed model") iteratively generate, test, and refine model-improvement algorithms. Think of it as an LLM creating its own personal trainer!

Here's the process:

- Algorithm Factory Initialization: A copy of the seed model is created, acting as an "algorithm factory."

- Algorithm Generation: The algorithm factory generates Python code for these algorithms.

- Algorithm Evaluation: The new algorithms are applied to the seed model, and the results are evaluated on benchmark datasets (GSM8k and MATH).

- Algorithm Factory Refinement: Direct Preference Optimization (DPO) refines the algorithm factory based on the results. Essentially, it learns from what works and what doesn't.

- Iterative Improvement: Steps 2-4 repeat, simultaneously improving both the seed model and the algorithm factory.

The results were impressive! The Self-Developing framework produced LLMs that outperformed the original seed model and even surpassed models improved by human-designed algorithms like Task Arithmetic and TIES merging. Specific improvements included:

- A 6% improvement on the GSM8k dataset.

- Strong transferability of the LLM-discovered algorithms to different, unseen models.

- Higher initial temperatures were beneficial in generating diverse algorithms, while temperature decay led to more stable performance.

Challenges and Future Directions

While exciting, the research also highlighted some challenges:

- Generalizability: The framework's effectiveness might be limited to certain tasks or LLMs. More research is needed.

- Computational Cost: The iterative process is computationally expensive.

- Algorithm Interpretability: Understanding exactly how the LLM-generated algorithms work is difficult.

- Bias and Safety: LLMs can inherit biases from their training data, a crucial concern for any self-improving system.

Future work could explore different optimization methods, apply the framework to other tasks, improve algorithm interpretability, and address bias and safety concerns. The potential is enormous!

This research demonstrates that LLMs can autonomously develop effective self-improvement algorithms, exceeding human-designed methods. The Self-Developing framework opens up exciting new possibilities for AI development with minimal human intervention, paving the way for more efficient and autonomous AI systems. It's a fascinating step towards truly self-improving AI!