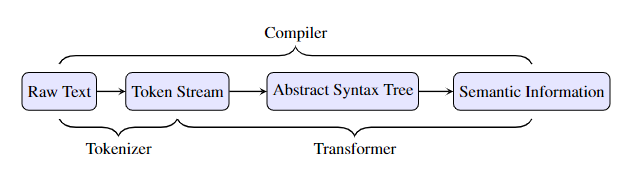

Large language models (LLMs) based on transformers have shown impressive results in various language tasks, including programming. This research paper delves into the potential of using transformers as compilers, a novel application that could significantly impact software development. The authors present a formal analysis comparing the efficiency of transformers to recurrent neural networks (RNNs) for compiler tasks. They introduce a new C-like language, Mini-Husky, and a domain-specific language, Cybertron, to facilitate their research.

To conduct their experiments in a controlled environment, the researchers developed Mini-Husky, a C-like programming language. This language includes key features of modern C-like languages, such as structs, enums, and strict type checking, making it well-suited for testing the capabilities of transformers as compilers. The choice of Mini-Husky allows for a focused investigation of the transformer's ability to handle core compiler tasks.

The study focuses on three core compiler tasks:

A key finding of the paper is that, under certain assumptions (bounded depth in the Abstract Syntax Tree (AST) and type inference), the number of parameters required by transformers to perform these compiler tasks scales logarithmically with the input sequence length. This is a significant improvement over RNNs, which exhibit linear parameter scaling. This logarithmic scaling implies that transformers are exponentially more efficient than RNNs for handling long code sequences, particularly relevant for large-scale projects.

To formally prove their theoretical results about the efficiency of transformers, the researchers developed Cybertron, a domain-specific language. Cybertron enables formal reasoning about the capabilities of transformer architectures in a way that is more straightforward than using traditional natural language proofs. This DSL assists in proving the logarithmic parameter scaling for transformers, contributing a novel approach to analyzing neural network architectures.

The research includes empirical validation using Mini-Husky. Experiments comparing transformers and RNNs on the three compiler tasks showed that transformers consistently outperform RNNs, especially as the input size increases. These results support the theoretical findings, demonstrating the practical advantage of transformers for compilation.

While the research presents compelling results, it also acknowledges certain limitations:

Future research could focus on relaxing the bounded depth assumption, evaluating the models on real-world code, and extending Cybertron's capabilities. Exploring different transformer architectures and applying these findings to other software engineering domains are also promising avenues for future work.

The paper provides strong evidence that transformers offer a significant efficiency advantage over RNNs for compilation tasks, particularly for well-structured code. The development of Cybertron highlights a promising new method for formally analyzing the capabilities of neural network architectures. The findings could have a major impact on the future of compiler design and software development.